Data Factory - "Data Production" for the training of AI software

A so-called data factory enables the systematic provision of large amounts of data for the training of AI software, for instance to train sensor-based perception systems that observe the railway environment. This is an important step towards fully automated driving.

In all industry sectors that use autonomous or semi-autonomous systems (such as autonomous driving on the road or robotics), sensor-based perception systems are of central importance. In combination with intelligent software, these systems can recognise, classify and analyse what they detect. In the rail industry, such systems are being tested in particular for the fully automatic driving of trains. In the Sensors4Rail project, Digitale Schiene Deutschland is currently testing, in practice, integrated systems consisting of environment perception, localisation and highly accurate digital maps that provide precise real-time information about the train's environment and its exact position.

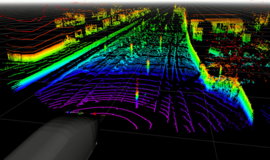

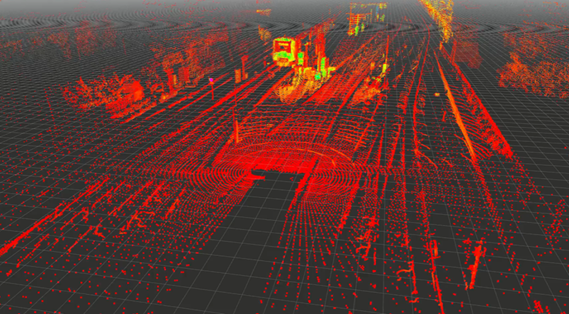

Sensor-based perception systems rely on data from various sensors, for example cameras, infrared cameras, radar and lidar (laser scanner, see Fig. 1).

The data from these sensors is processed and interpreted using AI software from the field of machine learning. This enables the systems to recognise their surroundings and objects such as trains, tracks and power poles. An intelligent combination of AI software and a powerful computing environment makes it possible to detect static and dynamic obstacles on and next to the track and to assess hazards. This plays a decisive role both in the development of possible assistance systems and in fully automated train operations.

Among other things, a geo-positioning system (e.g. GNSS, Global Navigation Satellite System) is used to localise the train. However, this alone is not sufficient for precise track localisation. Therefore, a highly accurate position is derived by comparing the sensor data with a previously created digital map of the railway environment. The AI software recognises static, unchanging objects (e.g. electricity pylons) in the vicinity of the train and then assigns them to known objects on the digital map. This so-called landmark-based localisation ensures localisation with centimetre accuracy.
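The idea of landmark-based localisation can be illustrated with a minimal Python sketch. It is not the method used by Digitale Schiene Deutschland. It assumes a flat 2D map in metres, landmark detections already expressed as offsets in the map frame, and a simple nearest-neighbour association. The function name `refine_position` and the parameter `max_match_dist` are hypothetical.

```python
import math


def refine_position(gnss_xy, detections, map_landmarks, max_match_dist=5.0):
    """Refine a coarse GNSS position using landmarks from a digital map.

    gnss_xy: coarse (x, y) estimate in map coordinates (metres).
    detections: landmark offsets (dx, dy) measured relative to the train,
        assumed to be already rotated into the map frame.
    map_landmarks: known (x, y) landmark positions from the digital map.
    """
    corrections = []
    for dx, dy in detections:
        # Where the landmark would lie if the GNSS estimate were exact.
        predicted = (gnss_xy[0] + dx, gnss_xy[1] + dy)
        # Associate the detection with the nearest known map landmark.
        nearest = min(map_landmarks, key=lambda m: math.dist(m, predicted))
        if math.dist(nearest, predicted) <= max_match_dist:
            corrections.append(
                (nearest[0] - predicted[0], nearest[1] - predicted[1])
            )
    if not corrections:
        return gnss_xy  # no landmark matched: fall back to GNSS
    # Average the per-landmark corrections and apply them to the estimate.
    cx = sum(c[0] for c in corrections) / len(corrections)
    cy = sum(c[1] for c in corrections) / len(corrections)
    return (gnss_xy[0] + cx, gnss_xy[1] + cy)


# Illustrative values only: two pylons on the map, GNSS off by about 1.8 m.
pylons = [(120.0, 14.0), (150.0, 6.0)]
observed = [(20.0, 4.0), (50.0, -4.0)]
print(refine_position((101.5, 9.0), observed, pylons))
```

A real system would also estimate the train's heading and weight each landmark by its measurement uncertainty. The sketch only shows how matching detections to map objects corrects a coarse position.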

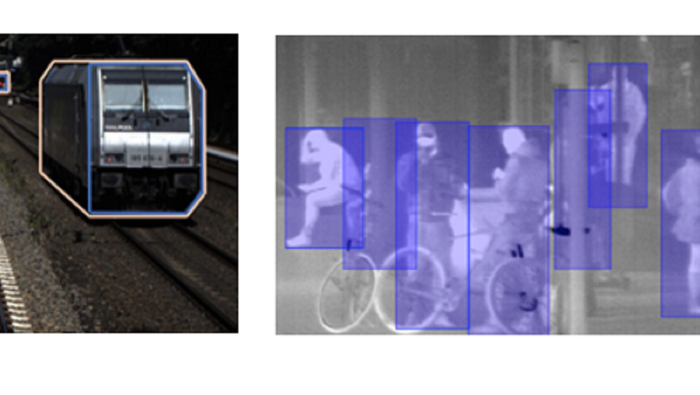

To develop such AI software for environment perception, very large amounts of high-quality data are required. For example, tens of thousands of "training images" are needed to reliably train AI software to recognise certain objects. For training, the image areas containing the objects to be learned must be labelled beforehand during data preparation (see Fig. 2). These marked image areas are called annotations.
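As an illustration of what an annotation might look like as data, the following Python sketch models a labelled rectangular image area. The class and field names (`Annotation`, `TrainingImage`, `x_min` and so on) are assumptions made for this example and do not describe the project's actual annotation format.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Annotation:
    """A labelled rectangular image area, in pixel coordinates."""
    label: str
    x_min: int
    y_min: int
    x_max: int
    y_max: int

    def area(self) -> int:
        # Clamp at zero so a malformed box never yields a negative area.
        width = max(0, self.x_max - self.x_min)
        height = max(0, self.y_max - self.y_min)
        return width * height


@dataclass
class TrainingImage:
    """One training image together with its annotations."""
    file_name: str
    annotations: list = field(default_factory=list)


def count_labels(images):
    """Count how often each object class is annotated across all images."""
    counts = {}
    for image in images:
        for annotation in image.annotations:
            counts[annotation.label] = counts.get(annotation.label, 0) + 1
    return counts


# Hypothetical file names and labels, for illustration only.
dataset = [
    TrainingImage("frame_0001.png", [Annotation("train", 40, 60, 200, 180),
                                     Annotation("power pole", 300, 10, 320, 220)]),
    TrainingImage("frame_0002.png", [Annotation("power pole", 280, 12, 298, 215)]),
]
print(count_labels(dataset))
```

Counting annotations per class in this way is one simple check that each object type is represented often enough in the training images.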

This large amount of data can be collected in two ways: by recording sensor data in the railway environment, and by simulation. In the latter case, artificial sensor data is generated, comparable to the simulated 3D environments of computer games. The additional simulation of sensor data is necessary because it is impossible to capture all conceivable events and special cases through recordings of regular rail operations. Furthermore, simulating data is significantly cheaper than recording real data.

Finally, a mixture of recorded real data and artificial simulation data forms the data basis for training the AI software. With this data basis and the help of high-performance computers, AI-based object recognition and train localisation can be developed.
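How real and simulated samples might be combined into one training set can be sketched in a few lines of Python. The mixing ratio `sim_fraction`, the function name `build_training_set` and the sample names are assumptions for illustration. The article does not state which ratio is actually used.

```python
import random


def build_training_set(real, simulated, sim_fraction=0.3, size=1000, seed=0):
    """Draw a shuffled training set from real and simulated samples.

    sim_fraction is the share of simulated samples aimed for. If either
    pool is too small, fewer samples than `size` are returned.
    """
    rng = random.Random(seed)  # fixed seed keeps the draw reproducible
    n_sim = min(len(simulated), round(size * sim_fraction))
    n_real = min(len(real), size - n_sim)
    mixed = rng.sample(real, n_real) + rng.sample(simulated, n_sim)
    rng.shuffle(mixed)  # avoid blocks of purely real or purely simulated data
    return mixed


# Hypothetical sample identifiers.
real_samples = [f"real_{i}" for i in range(100)]
sim_samples = [f"sim_{i}" for i in range(100)]
training_set = build_training_set(real_samples, sim_samples, size=10)
print(training_set)
```

Sampling without replacement keeps every sample unique within one training set. In practice the ratio would be tuned against how well the trained software performs on real recordings.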

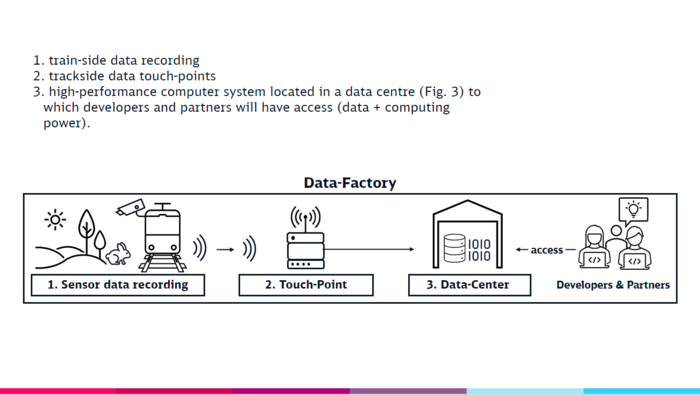

To continuously improve and further train the AI software, a so-called "data loop" is required. This is a circular flow of real and simulated data that keeps the database up to date. To provide the immense amount of data and the data loop for the continuous training of the AI software, Digitale Schiene Deutschland has started to build a so-called data factory, which will consist of three core components.

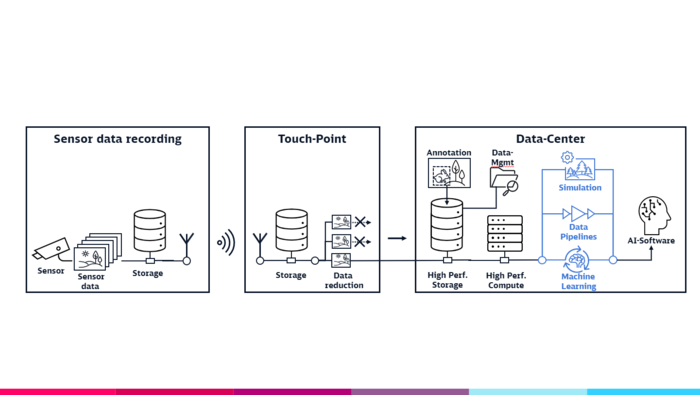

The touchpoints of the data factory, which are still to be developed, will enable large volumes of recorded sensor data to flow from the train to the data centre. A touchpoint will connect wirelessly to a train within range and download the large amount of sensor data that the train has collected during its journey. The data is then pre-processed and pre-sorted (data reduction) in the touchpoint so that only the relevant portion of the data needs to be transmitted to the data centre (Fig. 4 left, centre). The amount of data required for training the AI software will be very high at the beginning, but will decrease as training continues.
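The data reduction step in a touchpoint could, in its simplest form, look like the following Python sketch. It assumes that every recorded frame already carries a `relevance` score between 0 and 1. How such a score would be computed is not described in the article, so the field and the threshold `min_relevance` are hypothetical.

```python
def reduce_recording(frames, min_relevance=0.5):
    """Pre-sort frames by relevance and drop those below the threshold.

    frames: list of dicts with a hypothetical "relevance" score in [0, 1].
    Returns the kept frames (most relevant first) and the kept fraction.
    """
    ranked = sorted(frames, key=lambda f: f["relevance"], reverse=True)
    kept = [f for f in ranked if f["relevance"] >= min_relevance]
    kept_fraction = len(kept) / len(frames) if frames else 0.0
    return kept, kept_fraction


# Illustrative recording with made-up scores.
recording = [
    {"id": "frame_001", "relevance": 0.1},
    {"id": "frame_002", "relevance": 0.9},
    {"id": "frame_003", "relevance": 0.6},
    {"id": "frame_004", "relevance": 0.2},
]
to_upload, fraction = reduce_recording(recording)
print([f["id"] for f in to_upload], fraction)
```

Raising `min_relevance` over time would be one way to reflect the decreasing need for data as training progresses.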

In the data centre, the data is stored in a large data store. Next, annotations are created for the recorded sensor data, which the data management then makes manageable, visualisable and searchable (Fig. 4 right).

A system of high-performance computers is connected to the large data store. It hosts a software-based tool chain (Fig. 4, blue) for the development of the AI software. The tool chain includes tools for simulation, data pipelines for further processing and qualitative enhancement of the data, and tools for machine learning. It is used to train and test the AI software for environment perception.

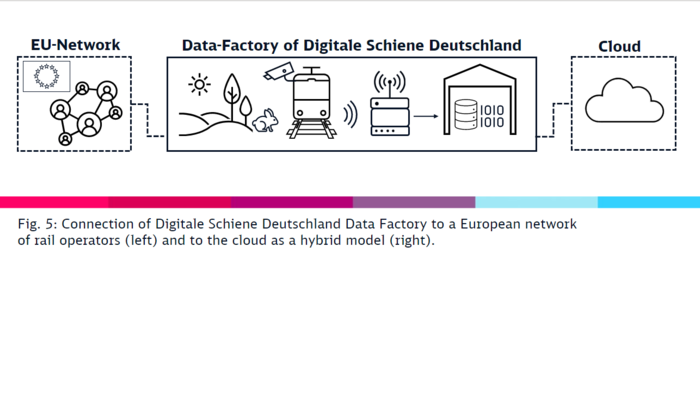

Since cross-border traffic is very important for the rail industry, a further expansion stage of the data factory is to be realised at European level. For peak loads and special computing operations, the data factory will then be connected to a cloud environment. This approach is called a hybrid model. The aim is to use a European networked data factory to achieve synergy effects with other European rail operators and to create a basis for Europe-wide standards in the digitalisation of the rail system (Fig. 5). This is part of European initiatives such as ERJU (Europe's Rail Joint Undertaking).