Data Factory

A data factory is used to systematically generate, process and make available large volumes of necessary data for the various use cases of the digitalized rail system. One prominent use case is the training of systems that work with artificial intelligence (AI), such as environment and obstacle detection. The database consists of the recording of real sensor data from the track environment on the one hand and simulated, artificially generated images on the other.


Digitale Schiene Deutschland started setting up a "data factory" in 2022. In the future, it will make it possible to systematically generate, collect and provide the large volumes of data required for the various applications of the digitalized rail system. On the one hand, digital systems such as the sensors and cameras on trains that monitor the track environment in detail generate large amounts of data. On the other hand, digitalization also requires the systematic generation of data, for example to adequately "train" artificial intelligence (AI). A prominent use case is the "training" of AI software for sensor-based perception systems, which is needed in particular for fully automated, driverless operation (so-called Grade of Automation 4, GoA4). Developing such AI software, e.g. for environment perception, requires large amounts of real sensor data and simulated data of very high quality.

Organizing such data for the training of AI-based functions via a uniform cloud and IT platform is a major challenge. The platform should enable the processing and use of this data by various stakeholders (operators, manufacturers, AI experts, etc.).

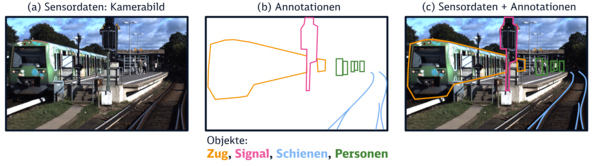

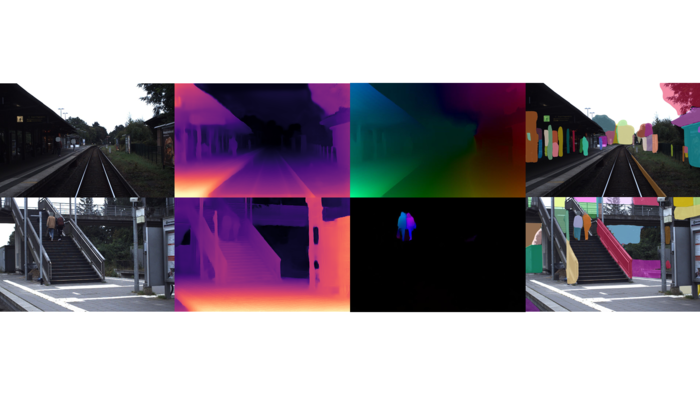

The required data volumes can be produced in two ways: by recording real sensor data in the track environment and by generating artificial data in various simulation environments. The recorded real data must then be processed, and the image areas containing the objects to be learned must be marked before the data can be used for training. These marked image areas are called annotations.
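To make the notion of an annotation concrete, the following minimal sketch models one marked image area as an axis-aligned bounding box with a class label, plus a sanity check that the box lies inside the image. The class name `BoxAnnotation` and its fields are hypothetical and chosen for illustration only; real annotation formats (such as the one used for OSDaR23) carry considerably more information.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoxAnnotation:
    """A marked image area: an axis-aligned box in pixel coordinates plus a class label.

    The field names are illustrative assumptions, not a published annotation schema.
    """

    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        # Width times height of the marked area, in square pixels.
        return (self.x_max - self.x_min) * (self.y_max - self.y_min)

    def is_valid(self, image_width: int, image_height: int) -> bool:
        # A usable annotation has a positive size and lies completely inside the image.
        return (
            0 <= self.x_min < self.x_max <= image_width
            and 0 <= self.y_min < self.y_max <= image_height
        )


if __name__ == "__main__":
    person = BoxAnnotation("person", 100, 200, 140, 320)
    print(person.area())                # 4800
    print(person.is_valid(1920, 1080))  # True
```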

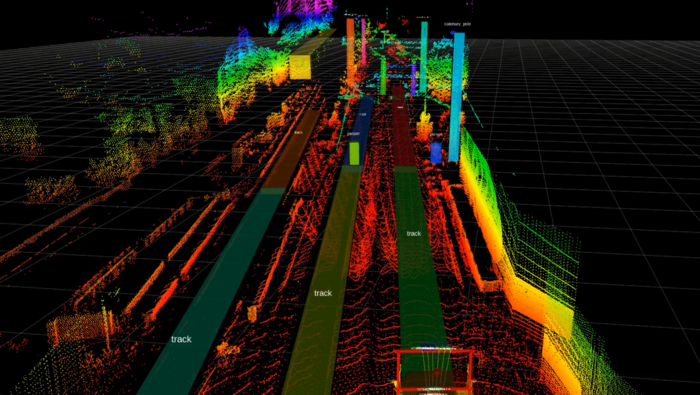

In the simulation of artificial sensor data, data is "produced", comparable to the simulated 3D environments of computer games. This additional simulation is necessary because recording regular rail operations can never capture all conceivable events and special cases. In addition, simulated data is significantly cheaper to obtain than real recorded data.

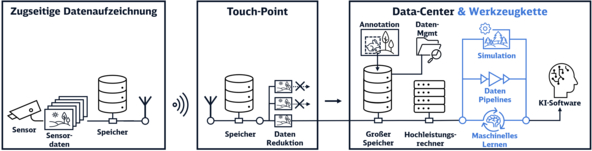

To continuously improve the database for training AI software, a so-called "data loop" combining real and simulated data is required. Three steps are necessary to provide the immense amount of data and to run the data loop for the continuous training of AI:

- The data captured by the sensors on the train is recorded during the journey. Touchpoints that connect wirelessly to a train within range download the large amount of collected sensor data. The data is then pre-processed and pre-sorted at the touchpoint (data reduction).

- The relevant part of the real data is then transmitted to the data center, where it is managed, visualized, made searchable and combined with the simulated data.

- The simulated data is also transferred to the data center via a software-based tool chain. This task requires a state-of-the-art infrastructure with high-performance processors, enormous storage capacity and a cloud connection.
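The three steps can be sketched as a tiny in-memory pipeline: a touchpoint keeps only the relevant frames of a downloaded recording, and a data center stores real and simulated frames together and makes them searchable by tag. Everything here is an assumption for illustration: the names `Frame`, `touchpoint_reduce` and `DataCenter`, the tag-based search, and the relevance rule ("keep frames with at least one tag") are hypothetical and do not describe the actual Data Factory implementation.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable


@dataclass
class Frame:
    frame_id: str
    source: str                                  # "real" or "simulated"
    tags: set[str] = field(default_factory=set)  # labels attached during pre-processing


def touchpoint_reduce(frames: Iterable[Frame], is_relevant: Callable[[Frame], bool]) -> list[Frame]:
    """Step 1: after download at the touchpoint, keep only the relevant part (data reduction)."""
    return [f for f in frames if is_relevant(f)]


class DataCenter:
    """Steps 2 and 3: store real and simulated frames side by side and make them searchable."""

    def __init__(self) -> None:
        self._frames: dict[str, Frame] = {}

    def ingest(self, frames: Iterable[Frame]) -> None:
        for f in frames:
            self._frames[f.frame_id] = f

    def search(self, tag: str) -> list[str]:
        return sorted(f.frame_id for f in self._frames.values() if tag in f.tags)


if __name__ == "__main__":
    recorded = [
        Frame("r1", "real", {"person"}),
        Frame("r2", "real"),  # nothing detected: dropped at the touchpoint
        Frame("r3", "real", {"animal"}),
    ]
    simulated = [Frame("s1", "simulated", {"person", "snow"})]

    dc = DataCenter()
    # Hypothetical relevance rule: keep frames with at least one tag.
    dc.ingest(touchpoint_reduce(recorded, lambda f: bool(f.tags)))
    dc.ingest(simulated)
    print(dc.search("person"))  # ['r1', 's1']
```

Once real and simulated frames share one index, a single query returns both kinds of data, which is what closes the loop for the next training round.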


- Training of AI software for environment perception and obstacle detection during train movements

- Enabling the use of sensor-based perception systems for on-board route monitoring by the train

- Basis for the realization of fully automated driving

- Cloud connection for the realization of a European networked data factory

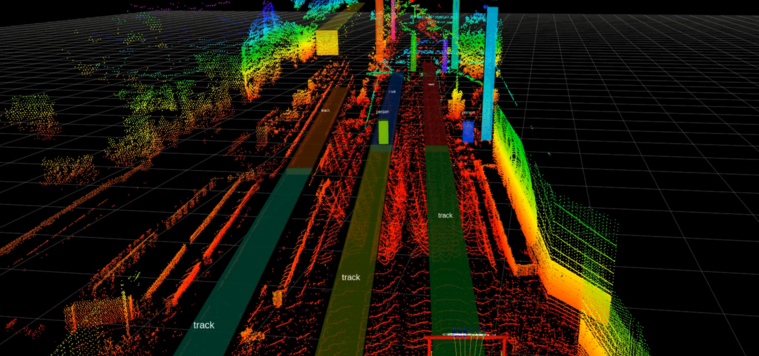

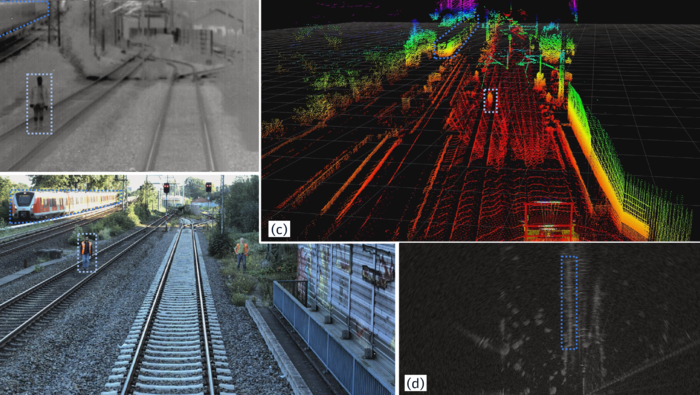

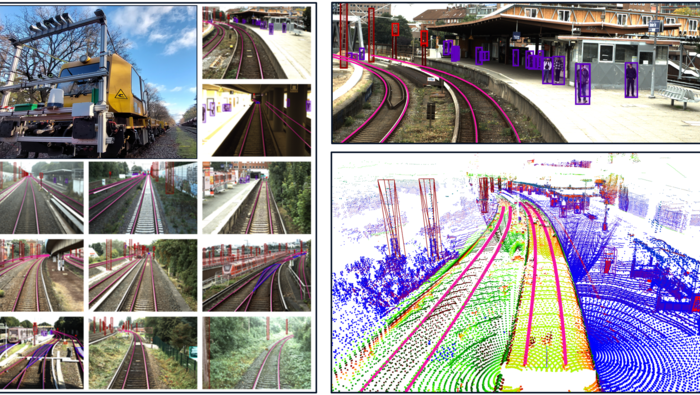

To date, there are hardly any public data sets from the rail sector. DB InfraGO AG has therefore created and published the first publicly available multi-sensor data set, OSDaR23, as part of the "Digitale Schiene Deutschland" sector initiative together with the German Centre for Rail Traffic Research (DZSF) at the German Federal Railway Authority (EBA).

The data set consists of time-synchronized sensor data from:

- 3 high-resolution cameras, 3 medium-resolution cameras, 3 infrared cameras

- 3 long-range LiDARs, 1 medium-range LiDAR, 2 short-range LiDARs

- 1 long-range radar, 4 inertial measurement units, 4 GPS/GNSS sensors
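"Time-synchronized" means that measurements from different sensors can be related to the same moment. The source does not say how OSDaR23 achieves this; the sketch below only illustrates one common way of pairing streams afterwards, namely nearest-timestamp matching with a tolerance. The function names, the timestamps and the 30 ms tolerance are hypothetical.

```python
from bisect import bisect_left


def nearest_index(sorted_times: list[float], t: float) -> int:
    """Index of the entry in a non-empty, ascending list that is closest to t."""
    i = bisect_left(sorted_times, t)
    if i == 0:
        return 0
    if i == len(sorted_times):
        return len(sorted_times) - 1
    # Choose the closer neighbour; on a tie, prefer the earlier timestamp.
    return i if sorted_times[i] - t < t - sorted_times[i - 1] else i - 1


def synchronize(reference: list[float], other: list[float], tolerance: float) -> list[tuple[float, float]]:
    """Pair each reference timestamp with the nearest timestamp of another sensor.

    Pairs whose time gap exceeds `tolerance` (seconds) are discarded.
    """
    pairs = []
    for t in reference:
        j = nearest_index(other, t)
        if abs(other[j] - t) <= tolerance:
            pairs.append((t, other[j]))
    return pairs


if __name__ == "__main__":
    camera = [0.00, 0.10, 0.20, 0.30]  # hypothetical camera timestamps in seconds
    lidar = [0.02, 0.12, 0.31]         # hypothetical LiDAR sweep timestamps
    print(synchronize(camera, lidar, tolerance=0.03))
    # [(0.0, 0.02), (0.1, 0.12), (0.3, 0.31)]  (the 0.20 s frame has no LiDAR partner)
```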

The data set contains annotations of 20 object classes in the ASAM OpenLABEL annotation format and is available for download.
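As a first look at such annotation files, one might count how many objects of each class they contain. The sketch below assumes a strongly simplified OpenLABEL-style JSON layout, `{"openlabel": {"objects": {id: {"name": ..., "type": ...}}}}`; real OSDaR23 files contain much more (metadata, frames, streams, coordinate systems), so consult the ASAM OpenLABEL specification before relying on this structure. The class names in the sample are hypothetical.

```python
import json
from collections import Counter


def count_object_classes(openlabel_text: str) -> Counter:
    """Count annotated objects per class in an OpenLABEL-style JSON document.

    Assumes the simplified layout {"openlabel": {"objects": {id: {"name": ..., "type": ...}}}}.
    """
    doc = json.loads(openlabel_text)
    objects = doc.get("openlabel", {}).get("objects", {})
    return Counter(obj["type"] for obj in objects.values())


if __name__ == "__main__":
    sample = json.dumps({
        "openlabel": {
            "metadata": {"schema_version": "1.0.0"},
            "objects": {
                "0": {"name": "person_0", "type": "person"},
                "1": {"name": "track_0", "type": "track"},
                "2": {"name": "person_1", "type": "person"},
            },
        }
    })
    print(count_object_classes(sample))  # Counter({'person': 2, 'track': 1})
```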

Further Information:

https://digitale-schiene-deutschland.de/Downloads/ETR-OSDaR23.pdf

https://digitale-schiene-deutschland.de/en/news/2022/Data-Factory

The evaluation of sensor data in fully automated driving is also likely to be carried out by AI models developed with machine learning (ML) algorithms on the basis of suitable training, validation and test data. ML relies on data sets of input and output data from which an ML algorithm can "learn". In the case of object recognition in automatic train operation (ATO), the input data is, for example, sensor data covering the relevant areas, such as the route ahead of the train; the objects to be recognized (e.g. people, tracks) must also be captured in this data. The output data comprises everything the ML model to be developed is supposed to derive from the input data, for example the location coordinates of the areas in which the objects to be recognized are located, the classes of the objects or the values of object attributes.
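The input/output structure and the split into training, validation and test data can be sketched as follows. `TrainingSample` pairs a reference to a sensor recording (input) with class-plus-location labels (output); `assign_split` assigns each sample deterministically by hashing its ID, so the assignment stays stable as the data set grows. Both names, the field layout and the 80/10/10 split are assumptions for illustration, not a prescribed procedure.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class TrainingSample:
    """One ML example: input = sensor recording, output = what the model should derive from it."""

    sample_id: str
    sensor_frame: str  # hypothetical reference to a camera image or LiDAR point cloud
    # Output data: (class, (x_min, y_min, x_max, y_max)) pairs, i.e. class plus location.
    labels: list[tuple[str, tuple[float, float, float, float]]] = field(default_factory=list)


def assign_split(sample_id: str, val_fraction: float = 0.1, test_fraction: float = 0.1) -> str:
    """Deterministically assign a sample to 'train', 'val' or 'test' by hashing its ID."""
    digest = hashlib.sha256(sample_id.encode("utf-8")).digest()
    # Keep 53 bits so the value is exactly representable as a float in [0, 1).
    u = (int.from_bytes(digest[:8], "big") >> 11) / 2**53
    if u < test_fraction:
        return "test"
    if u < test_fraction + val_fraction:
        return "val"
    return "train"


if __name__ == "__main__":
    samples = [TrainingSample(f"seq01_frame{i:04d}", f"seq01/cam/{i:04d}.png") for i in range(1000)]
    counts = {"train": 0, "val": 0, "test": 0}
    for s in samples:
        counts[assign_split(s.sample_id)] += 1
    print(counts)  # roughly 800 / 100 / 100; exact numbers depend on the hashes
```

Because consecutive frames of one journey look almost identical, it is probably safer in practice to pass a recording-sequence ID rather than a frame ID to `assign_split`, so that near-duplicates do not end up in both training and test data.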

Given the large amount of data that will be required for the development, testing and approval of ATO functions, at least semi-automatic pre-analysis appears sensible. Certain objects, situations or environmental conditions can then be identified automatically in the recorded data. This makes it possible to find specific data, such as recordings of wild animals or of objects located at particularly relevant distances or in particular zones around the track. These can then be annotated and used to train the AI processes. Special weather conditions such as rain or driving snow can also be recognized automatically and added to the data as a machine-generated description. In the future, the overall assessment of a situation, i.e. how relevant the data is for AI training or testing, will also be performed by computer.
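Once a pre-analysis step has attached machine-generated detections and weather tags to each frame, finding "wild animals within a relevant distance" or "frames recorded in rain" becomes a simple query. The sketch below assumes such tags already exist; `Detection`, `RecordedFrame`, `find_frames` and the class names are hypothetical, and the detection model itself is out of scope.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Detection:
    label: str         # class proposed by an automatic pre-analysis model (hypothetical)
    distance_m: float  # estimated distance from the train, in metres


@dataclass
class RecordedFrame:
    frame_id: str
    detections: list[Detection] = field(default_factory=list)
    weather: set[str] = field(default_factory=set)  # machine-generated tags, e.g. {"rain"}


def find_frames(
    frames: list[RecordedFrame],
    label: Optional[str] = None,
    max_distance_m: Optional[float] = None,
    weather: Optional[str] = None,
) -> list[str]:
    """Return IDs of frames that satisfy every given criterion (None = not filtered)."""
    hits = []
    for frame in frames:
        if weather is not None and weather not in frame.weather:
            continue
        if label is not None or max_distance_m is not None:
            # At least one detection must match both the class and the distance zone.
            if not any(
                (label is None or d.label == label)
                and (max_distance_m is None or d.distance_m <= max_distance_m)
                for d in frame.detections
            ):
                continue
        hits.append(frame.frame_id)
    return hits


if __name__ == "__main__":
    frames = [
        RecordedFrame("f1", [Detection("animal", 80.0)], {"snow"}),
        RecordedFrame("f2", [Detection("animal", 400.0)]),
        RecordedFrame("f3", [Detection("person", 50.0)], {"rain"}),
    ]
    print(find_frames(frames, label="animal", max_distance_m=200.0))  # ['f1']
    print(find_frames(frames, weather="rain"))                        # ['f3']
```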

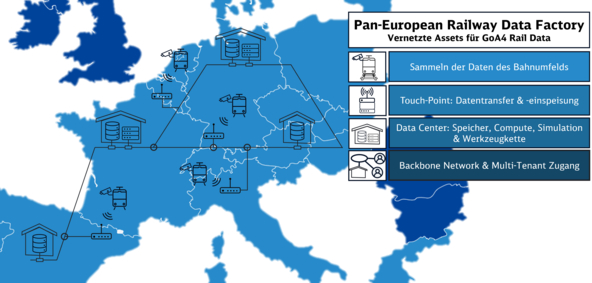

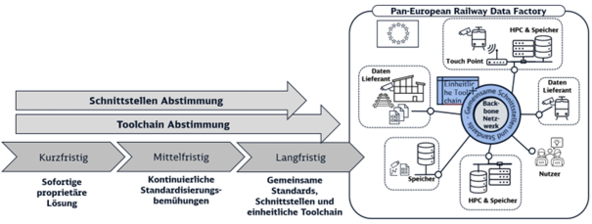

Setting up and operating a data factory for a fully digitalized rail system is a major task. There is therefore a consensus in the rail sector that individual railroad companies or manufacturers will not be able to provide enough sensor data in the future to be able to train the numerous AI functions sufficiently. The European rail sector is therefore considering the creation of a "pan-European Railway Data Factory" with a shared infrastructure that will enable rail companies and manufacturers across Europe to collect, process and simulate sensor data and make it available for mutual use.

The implementation strategy for the Pan-European Railway Data Factory (PEDF) is divided into short-, medium- and long-term measures. In the short term, the focus is on separate technical and legal solutions for the individual national data factories. In the medium term, the aim is to align standards in order to enable the gradual integration of the members' data factories. In the long term, the aim is comprehensive coordination of standardization efforts, particularly with regard to data quality, formats, interfaces and interconnectivity.

The participation paths for PEDF members include interface coordination, which allows flexible data exchange, and toolchain coordination, which harmonizes the tool chains. The strategy is characterized by a pragmatic, step-by-step development intended to make PEDF a versatile and effective pan-European initiative.

Digitale Schiene Deutschland therefore helped launch the "Rail Data Factory" project under the "CEF2 Digital" funding program and, together with the French railway SNCF and the Dutch railway NS, conducted a study co-funded by the European Health and Digital Executive Agency (HaDEA). The aim was to assess the feasibility of a Pan-European Railway Data Factory from a technical, economic, regulatory and operational perspective. The study started in January 2023 and was completed in December 2023. A so-called Rail Advisory Board and close coordination with data factory-related activities in the Europe's Rail funding project "R2DATO" ensured that the study took the needs of the rail sector into account and was carried out in line with comparable activities.

In addition to the development of the architecture and an implementation plan, a key result was the confirmation that the establishment of a Pan-European Railway Data Factory is highly relevant for the project participants.

Further Information:

https://digitale-schiene-deutschland.de/en/news/2023/Pan-European-Railway-Data-Factory


The ERJU "R2DATO" project (ERJU = Europe's Rail Joint Undertaking) aims to develop a joint innovation roadmap for rail operators and manufacturers for future Europe-wide digital and automated rail transport and to develop and test the necessary technological enablers for this.

On the one hand, requirements for the Data Factory will be worked out and shared with the project members. On the other hand, the Data Factory prototype of Digitale Schiene Deutschland will be built in parallel. The requirements focus on the assets in the data center and the future tool chain, as well as on data quality and annotation.

The tool chain includes a data platform that handles data management and visualization. It also includes tools for annotating (sensor) data and a simulation platform that synthesizes artificial data (see section 2). The training and evaluation of AI functions is carried out in the machine learning platform, which is also part of the tool chain. The Testing & Certification Platform is intended to support the future approval of AI functions and an Access & Information Platform ensures the seamless interaction of the individual tools.
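One way to picture a tool chain of separate platforms that work together is as components behind agreed interfaces. The sketch below uses Python `typing.Protocol` to define hypothetical interfaces for the data, annotation, simulation and machine learning platforms and wires toy implementations into one flow. The method names and the flow are illustrative assumptions, not the R2DATO design; the Testing & Certification and Access & Information platforms are left out for brevity.

```python
from typing import Protocol


class DataPlatform(Protocol):
    def store(self, items: list[str]) -> None: ...
    def query(self, tag: str) -> list[str]: ...


class AnnotationTool(Protocol):
    def annotate(self, item: str) -> dict: ...


class SimulationPlatform(Protocol):
    def synthesize(self, scenario: str, n: int) -> list[str]: ...


class MLPlatform(Protocol):
    def train(self, dataset: list[dict]) -> str: ...  # returns a model identifier


def run_toolchain(data: DataPlatform, annotator: AnnotationTool,
                  sim: SimulationPlatform, ml: MLPlatform, scenario: str) -> str:
    """Hypothetical flow: simulate a scenario, store it, select it, annotate it, train on it."""
    data.store(sim.synthesize(scenario, n=2))
    selected = data.query(scenario)
    dataset = [annotator.annotate(item) for item in selected]
    return ml.train(dataset)


# Toy stand-ins; any class with matching methods satisfies the protocols (structural typing).
class InMemoryDataPlatform:
    def __init__(self) -> None:
        self.items: list[str] = []

    def store(self, items: list[str]) -> None:
        self.items.extend(items)

    def query(self, tag: str) -> list[str]:
        return [i for i in self.items if tag in i]


class EmptyLabelAnnotator:
    def annotate(self, item: str) -> dict:
        return {"item": item, "labels": []}


class NamingSimulator:
    def synthesize(self, scenario: str, n: int) -> list[str]:
        return [f"sim/{scenario}/{k}" for k in range(n)]


class CountingMLPlatform:
    def train(self, dataset: list[dict]) -> str:
        return f"model-trained-on-{len(dataset)}"


if __name__ == "__main__":
    print(run_toolchain(InMemoryDataPlatform(), EmptyLabelAnnotator(),
                        NamingSimulator(), CountingMLPlatform(), "snow"))
    # model-trained-on-2
```

The point of the interface-first layout is that any platform can be swapped for another implementation as long as it offers the same methods, which is the kind of flexibility a standardized tool chain aims for.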

Building on the results of the CEF2 study (section 6), the concept of the Pan-European Data Factory is being further pursued and developed here. The aim is to merge independent data factories and IT assets using a high-speed network, define common interfaces and create a standardized toolchain.

The standardized toolchain is intended to ensure data sovereignty and enable non-discriminatory access to data for stakeholders. In addition, it should create synergies in data collection, data processing and AI development and enable the approval of AI functions.
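The combination of data sovereignty and non-discriminatory access can be illustrated with a toy federated search: each national data factory keeps its own catalogue and decides which data sets it releases, while all factories answer the same query through the same interface. The class `DataFactory`, the function `federated_search` and the example catalogues ("DE", "FR") are hypothetical; the source does not define the actual PEDF interfaces.

```python
from dataclasses import dataclass, field


@dataclass
class DataFactory:
    """A national data factory that keeps control over its own data (data sovereignty)."""

    name: str
    datasets: dict[str, set[str]] = field(default_factory=dict)  # dataset ID -> descriptive tags
    shared: set[str] = field(default_factory=set)                # IDs released for mutual use

    def search(self, tag: str) -> list[str]:
        # Common interface: the same query returns the same answer for every requester
        # (non-discriminatory), but only datasets the owner has released are visible.
        return sorted(d for d in self.shared if tag in self.datasets.get(d, set()))


def federated_search(factories: list[DataFactory], tag: str) -> dict[str, list[str]]:
    """Send one query to all connected factories through the shared interface and merge the answers."""
    return {f.name: f.search(tag) for f in factories}


if __name__ == "__main__":
    de = DataFactory("DE", {"de-1": {"snow"}, "de-2": {"snow", "night"}}, {"de-1"})
    fr = DataFactory("FR", {"fr-1": {"snow"}}, {"fr-1"})
    print(federated_search([de, fr], "snow"))  # {'DE': ['de-1'], 'FR': ['fr-1']}
```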

A legal opinion will clarify which areas of law are relevant in the R2DATO WP7 project. This expert opinion will thus form the basis for the further development of the concept of a consortium-led pan-European data factory.

Another component of the work package is the sector-wide coordination of data simulation and data annotation. In cooperation with the project partners, the first step is to simulate non-regular scenarios and annotate sensor data.

The digital map (digital Register WP27) will provide exemplary map data.

Finally, an open data set will be created containing real sensor data, annotations, simulated sensor data and map data.


Specialized articles

- Study result "D1 - Data Factory Concept, Use Cases and Requirements" | June 2023

The "pan-European Railway Data Factory" is a kind of ecosystem with a shared infrastructure that enables rail companies and manufacturers to collect, process and simulate sensor data across Europe and make it available for mutual use.

- Open multisensor data set for the development of environment perception in fully automated driving | April 2023 (only in German)

Machine learning methods will also be used for environment perception in automated driving in railroad operations. However, the data sets required for their development are currently hardly available to the public. Such a multi-sensor data set was created and published in a project by the DZSF and DB Netz AG as part of the Digitale Schiene Deutschland sector initiative.

Source: Eisenbahntechnische Rundschau